Oracles from the Pleroma of Data

Generative AI and the dawning new epistemology

Not long ago, researchers at Google and the University of Nottingham announced the development of Aeneas, a programme that claims to be able to reconstruct Roman inscriptions in their entirety from small surviving fragments. Epigraphers have been doing this for centuries, of course, and it remains to be seen whether Aeneas proves better than human scholars. At first glance, Aeneas strikes me as an example of a legitimate use of machine learning in Classics – a tool that may genuinely be able to process more data than a human could in order to see – or see more quickly – patterns a human might not (if it’s as good as the researchers claim). What worries me about tools like Aeneas is not their efficacy or the ethics of the researchers who actually use them, but the wider societal expectations of AI that they feed into. ‘We can read ancient inscriptions from a single fragment now, thanks to AI’ – but it’s not as simple as that. Machine learning has an error rate, just as humans do. Yet many people seem to have internalised the notion that AI is unerring, or at the very least that it could be unerring, with sufficient data. And I don’t think academics have understood quite what a profound epistemological shift – that is, a shift in our fundamental idea of what knowledge is – these expectations could be ushering in.

Set aside your scepticism of artificial intelligence for a moment, and imagine yourself as a wide-eyed believer in the unbounded potential of AI. If Aeneas can reconstruct lost inscriptions, then why couldn’t AI begin to fill in the gaps in longer ancient texts that survive in fragmentary form? To a certain extent, this is what the technology being used on the Herculaneum scrolls does. But let’s take it a step further. Only 35 of the 142 books of Livy’s Roman history survive. We have a rough idea of the chronology that those 107 lost books covered. Couldn’t AI one day supply the lost books of Livy? All an AI needs to do is to internalise Livy’s style of writing, spewing out endless Livian sentences like a Renaissance schoolboy doing Latin composition, while being provided with prompts as to what the broad topic of a lost Livian book should be.

Now, the idea that AI (even AI that had got very good at Livian prose) could give us back the lost 107 books of Livy is self-evidently ridiculous. Or at least it should be. What should worry us is that claims like this are no longer being taken as ridiculous by believers in the potential of AI. Under our current epistemological models, any attempt to generate the lost books of Livy via AI would be a pastiche, at best. Generative AI is a tool of simulation; like the ‘Feelies’ in Huxley’s Brave New World, it’s very good at simulating the sort of features in media that make humans feel like they’re getting the real thing. But if knowledge is justified true belief (to take the classic definition, usually traced to Plato), there are very few circumstances in which Generative AI can, well, generate knowledge. It’s a simulation based on a lot of data; glorified predictive text. But what I suspect many academics have failed to grasp is that the most ardent advocates of AI, and large sections of the population who are influenced by them and uncritically consume generative AI, no longer operate within a traditional epistemological paradigm. For them, generative AI does have the capacity to generate the truth and reveal new knowledge.

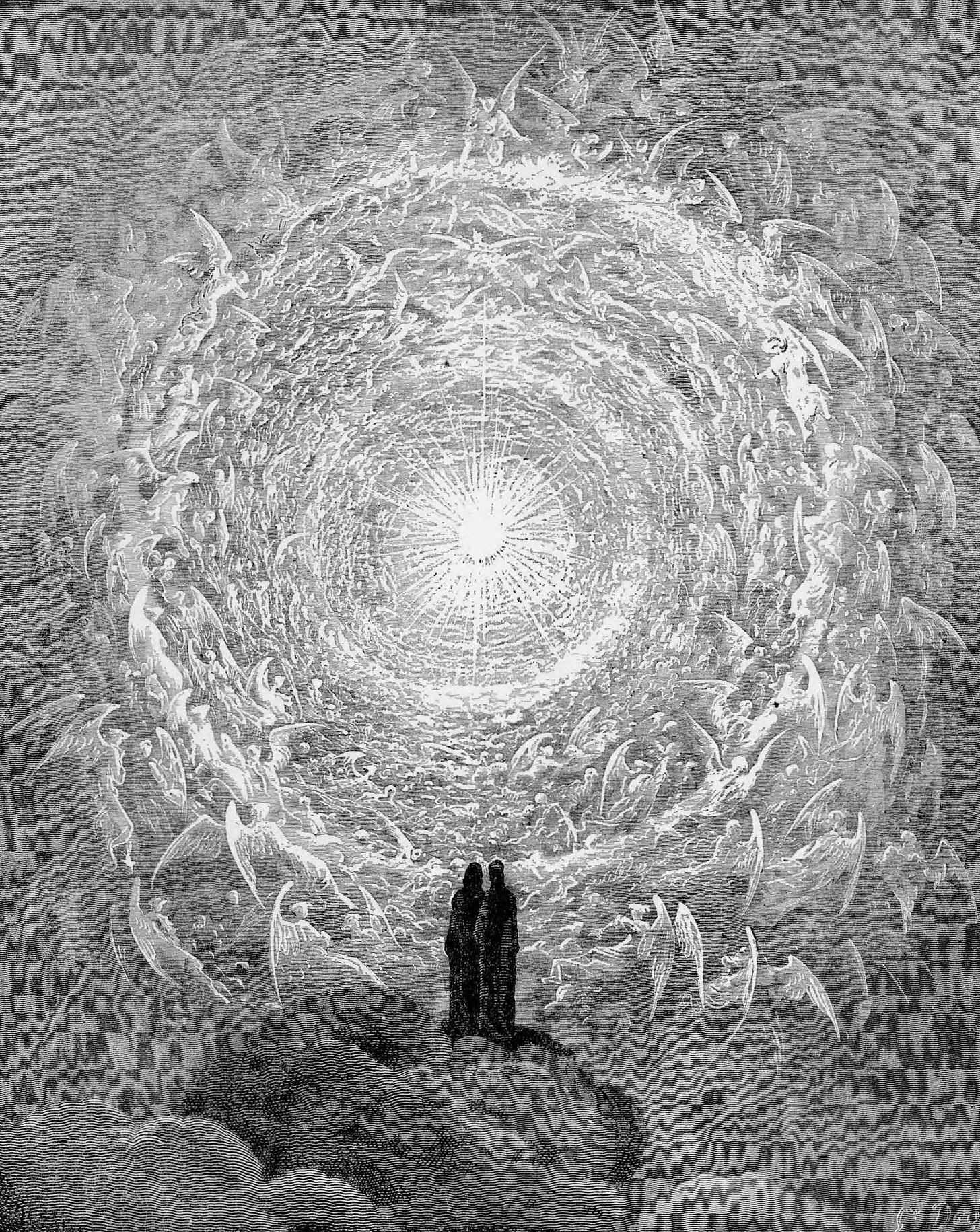

How could anyone believe this? In one sense, it is a return to a mantic concept of knowledge. Some people approach AI with an almost mystical awe and consider it somehow endowed with a spirit of greater-than-human intelligence. Using AI is thus an essentially mantic or divinatory process; the ghost in the machine reveals truths inaccessible to puny mortals. But even in the absence of this kind of AI-mysticism, many people seem to believe that the totality of data that AI allegedly possesses (or will soon possess) – let’s call it the pleroma, after the Gnostic word for the spiritual totality – gives AI unerring access to the truth. The totality of data, according to this unarticulated but implicit ideology, is the totality of human experience. What that means is that an AI with access to that pleroma of data reveals the truth – about anything. Within that pleroma can be found the lost thoughts of Livy, and so AI can literally restore the lost works of Livy – or Shakespeare, or Milton, or Sappho.

Here we come to the fundamental epistemological shift in whose midst we find ourselves. It is a shift away from the idea of knowledge as justified true belief, discovered by hard work and careful investigation, verified by its correspondence to evidence, and towards an idea of knowledge as the product of the pleroma of data, mediated by artificial intelligence. In other words, AI is a greater intelligence than us, and what it generates is the truth. The implications of this shift are profound, of course. It would mean a world where 107 lost books of Livy generated by AI are the lost books of Livy. It would mean a world where AI cannot ‘hallucinate’, because AI is itself the arbiter of truth; if AI seems to have erred, it must be us who are wrong, we who are misremembering the past or what we learnt in the pre-AI era. It would also mean a world without private thoughts, for if someone wants to know what a person thinks about something, they can ask a chatbot. What AI thinks you think is what you think.

This may seem extreme. But it does seem to be the logical end point of the attitude to AI-generated content displayed both by the uninformed and by the most zealous advocates of AI. Huge numbers of people already believe that AI can recover the ‘reality’ behind old photographs by animating, sharpening or colourising them; they already believe AI images generated from the Shroud of Turin genuinely reveal the face of Christ; they already believe the words of chatbots trained on the writings of historical figures express the actual thoughts of those historical figures. This is not simply magical thinking – the kind of hype that once surrounded technologies from electricity to radium. Rather, it speaks of a new relationship between human beings and the truth, where the truth now emerges from a pleroma of data and AI technologies are oracles. In this new world there is no death, no distinction between past and present, no barrier between the virtual and the real, and no difference between simulation and actual experience.

Here I must admit a conflict of interest. I am a historian of belief. I am interested in the emergence of new belief systems, especially strange ones. On one level, I am fascinated by the apparent dawning of a quasi-religious ideology in which human intelligence is subject to the pleroma of data. And I could easily be accused of hypocrisy in viewing such a development negatively when I am always eager to study the emergence of strange belief systems in the past. But the trouble with this particular strange belief system is that it threatens my own discipline of history, the very discipline through which I try to illuminate the existence of eccentric and discarded belief systems.

If an epistemological shift of the kind I describe is taking place, it is the first major shift since the one that occurred in the late 17th century, when the epistemological paradigm that reigned until the 21st century was established. This earlier shift involved the rejection of the notion that knowledge was established by authority alone, in the absence of experience; it is a process convincingly explained, in my view, by the historian of science Brian Copenhaver. The current shift, if it is indeed happening, seems to be a return to an epistemology of authority – not in this case the authority of the ancients or the Church Fathers, but of an imagined artificial supermind that has consumed the totality of data and is now the source of truth. Except of course that it hasn’t; AI is not, and cannot be, in possession of all data, and it isn’t a mind and never will be. Machine learning has its place, but the omnipresence and relentless promotion of generative AI within entertainment and social media has misled millions of people as to its potential and value, leaving them unmoored from the mechanisms by which we have striven to establish knowledge and truth (albeit not straightforwardly or uncontestedly) for the last four hundred years. I don’t know what the future holds, but what we are witnessing is far more than just ignorance. Something fundamental in the relationship between human beings and what we think we know (and how we think we know it) is shifting.

This seems to me an important point to emphasize. It's worth noting that reverse-engineering not only books, but people, was a thing for enthusiasts of the idea of AI long before there was any AI worth talking about.

I do think, though, that the way we are using the term 'artificial intelligence' needs some care. The idea of AIs that definitely *are* minds just like ours was always there and it has not been realized (although there is the old Turing Test question of how would you know if it had). That it *can never* be realized is a faith-based claim, very different from the claim that a large language model like ChatGPT is not, and cannot be, a mind.

You can argue that an LLM could be a component of a mind, certainly; or that it is an important step towards the emergence of 'general' AI - but then you could argue the same thing about all kinds of previous steps that went into the development of LLMs (as well as about other contemporary examples of AI that are not LLMs, like AlphaFold or Stockfish).

So that means that you could argue that statistical models were always a form of AI. Alternatively you could see current AI as a form of 'automated statistics,' part of a longer trend in research to have statistical models become more automated and opaque. Saying 'automated statistics' rather than 'artificial intelligence' would not be any more accurate, I think, but I think it would reveal deep roots to the epistemological change you describe.

I think the roots are in the concept of probability, and in the reluctance to engage with that concept on a deep level, which leads to a tendency to use it to arrive back at a simulacrum of a binary true and false.

Thank you. As a classicist, principally of Akkadian, this is a really interesting step forward in how we think about AI in manuscript ‘restoration.’